WebRTC is a significant part of the live streaming landscape, and the Softvelum team provides a wide range of related features in our products. On the ingest side, we have WHIP input in Nimble Streamer and WHIP output from Larix Broadcaster.

Nimble Streamer uses the Pion implementation of the WebRTC API. This framework not only offers a flexible API but also delivers high performance and low resource usage, which matches our own approach to creating sustainable and cost-effective software.

We’d like to thank Sean DuBois and all Pion contributors for maintaining such a great framework.

Playback is an important piece for building ultra-low latency delivery networks. That’s why we focused on a reliable, interoperable, and extensible way to implement it.

WHEP Playback

WebRTC WHEP (WebRTC HTTP Egress Protocol) provides easy communication between a server and a client while being interoperable with other solutions that support WHEP signaling. Our team always prefers to rely on open standards so we chose WHEP as the best option available.

WHEP is a result of industry cooperation, thanks to Sergio Murillo and Cheng Chen who developed it into an IETF standard draft.

We implemented WHEP playback support in Nimble Streamer and also provide the WHEP JS Player for customers’ reference. You can read the WHEP WebRTC low latency playback article and watch our WebRTC WHEP Playback setup video tutorial to learn how to set up and use this feature.

Nimble Streamer generates WHEP playback output with the following codecs:

- Video: H.264/AVC, VP8 and VP9

- Audio: Opus

You can refer to the supported codecs page to see how you can deliver the pre-encoded content and re-package it without additional action. For instance, you can ingest VP8 video and Opus audio using WebRTC WHIP and generate WHEP playback as is, with no additional overhead.

If your source uses different codecs, you can transcode the content. For example, if you get RTMP with H.264 video and AAC audio from your source, you need to use Live Transcoder to transcode AAC into Opus, because AAC is not supported in WebRTC. The H.264 video can be passed through without decoding and re-encoding, as it is already a supported codec.
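
The pass-through versus transcoding decision described above can be sketched in a few lines of Python. This is only an illustration based on the codec list in this article; the function name `plan_whep_output` and the lower-case codec labels are assumptions made for this example and are not part of Nimble Streamer or WMSPanel.

```python
# Codecs that this article lists for WHEP playback output.
WHEP_VIDEO_CODECS = {"h264", "vp8", "vp9"}
WHEP_AUDIO_CODECS = {"opus"}


def plan_whep_output(video_codec: str, audio_codec: str) -> dict:
    """Return 'passthrough' or 'transcode' for each track of a source stream.

    Hypothetical helper: it only compares codec labels with the lists above.
    """
    video = video_codec.strip().lower()
    audio = audio_codec.strip().lower()
    return {
        "video": "passthrough" if video in WHEP_VIDEO_CODECS else "transcode",
        "audio": "passthrough" if audio in WHEP_AUDIO_CODECS else "transcode",
    }


if __name__ == "__main__":
    # RTMP source with H.264 video and AAC audio: only the audio needs work.
    print(plan_whep_output("h264", "aac"))
    # WHIP source with VP8 video and Opus audio: nothing needs transcoding.
    print(plan_whep_output("vp8", "opus"))
```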

Adaptive bitrate playback

WebRTC customers who use WHEP for their last-mile low latency delivery expect to provide the best user experience for their viewers. For regular-latency protocols like HLS or DASH, this includes adaptive bitrate (ABR), which changes the stream resolution (and thus the throughput) according to the viewer’s network conditions.

WebRTC brings a challenge when it comes to delivering ABR streams for multiple viewers.

A media server has several problems to solve at this point:

- estimate the bandwidth correctly for each viewer,

- adjust the bitrate of every viewer’s session accordingly,

- do all that with low resource usage.

Bandwidth estimation in WebRTC is a very complex task. Different media servers and libraries do it in their own way, and there is little public information about the underlying algorithms. Handling ABR for each user while keeping resource usage at a decent level is another challenge on top of the algorithm itself.

High performance is one of the key advantages of Nimble Streamer: we have always developed our product with high performance in mind, being the most efficient product in our class. With the Pion framework, we could meet this expectation in our WebRTC implementation as well, thanks to Pion’s architecture and high level of optimization.

We released a separate article, Inside of WebRTC adaptive bitrate streaming algorithm from Softvelum, explaining in detail how our algorithm works and what its benefits are.

Our algorithm gives the best cost of ownership for our customers and a great user experience for their consumers.

Now let’s see how to set up WHEP ABR streaming for Nimble Streamer.

Prerequisites

This article assumes that you have basic experience with Nimble Streamer setup and usage, so here is a list of requirements for WHEP playback that you probably already comply with.

- You must have a WMSPanel account with an active paid subscription. If you don’t have one, you need to sign up. You also need a valid Live Transcoder license.

- Nimble Streamer and Live Transcoder must be installed. The installation steps are fully covered on the Nimble Streamer installation and Live Transcoder installation pages.

- Make sure that the SSL certificates are issued, valid and installed on your server, as described in our documentation.

- Set up WHEP single-output playback as described in our documentation article or YouTube video tutorial. Make sure that a single-resolution video plays fine with the HTTPS URL provided in WMSPanel during the setup.

Also, check live streaming setup articles for the respective protocols that you will use as an input.

Setting inputs

Let’s start the ABR WHEP setup.

Input protocols and codecs

Nimble Streamer’s versatility allows using any supported video stream input for this feature. The most popular input formats for streaming video are RTMP, SRT, NDI and WebRTC WHIP, but other supported protocols such as Zixi, RTSP or RIST are also available for ingest. The input live stream can be pulled or pushed, and a Playout output can also be used as a source for WebRTC WHEP output. Please refer to the supported codecs and protocols page as well.

We assume you know how to set up the ingestion of a required protocol. Please read our documentation on the protocols-related pages or live streaming digest page and our YouTube channel for more information. You can also contact our helpdesk if you need help at this point.

Transcode video input into various resolutions

Once you have the input stream in Nimble, you must transcode it into several resolutions before setting an ABR output. You may use ABR Wizard for that or create a scenario in Transcoder manually with the source decoder, filters and encoders.

Let’s say we received the good old Big Buck Bunny video as a live stream in Nimble, no matter how, via RTMP or some other protocol. It’s named ‘live/bbb’.

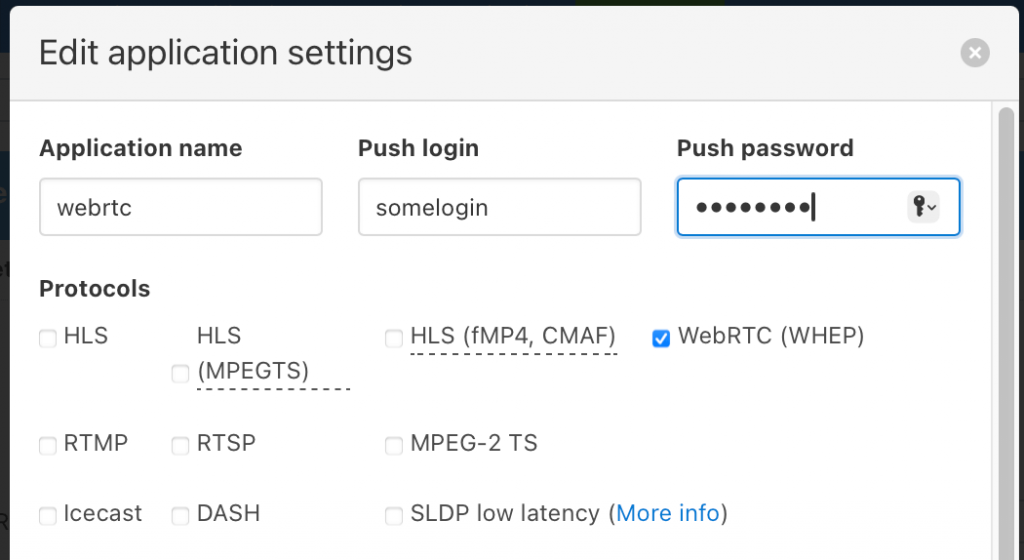

We start by creating a “webrtc” application that only outputs WebRTC, selecting the relevant checkbox in its settings. You may have other output protocols defined if required.

Let’s create a scenario manually. Navigate to the Transcoders menu and click the Create new Scenario button. Edit the scenario’s name using the ‘pencil’ icon, set the description and tags, and select the Out-of-process check box for better stability.

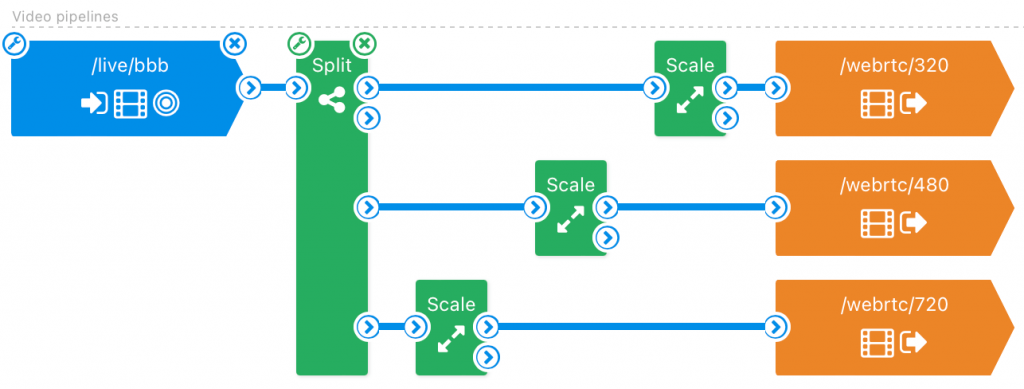

Next, put the ‘live/bbb’ video into the Transcoder’s Video pipeline as the Video source. Add the Split filter to produce several identical inputs for the Scale filters you will set next. Each Scale filter reduces the initial resolution to the resolution specified in its parameters.

Link Split and Scale filters and then set several Video encoders.

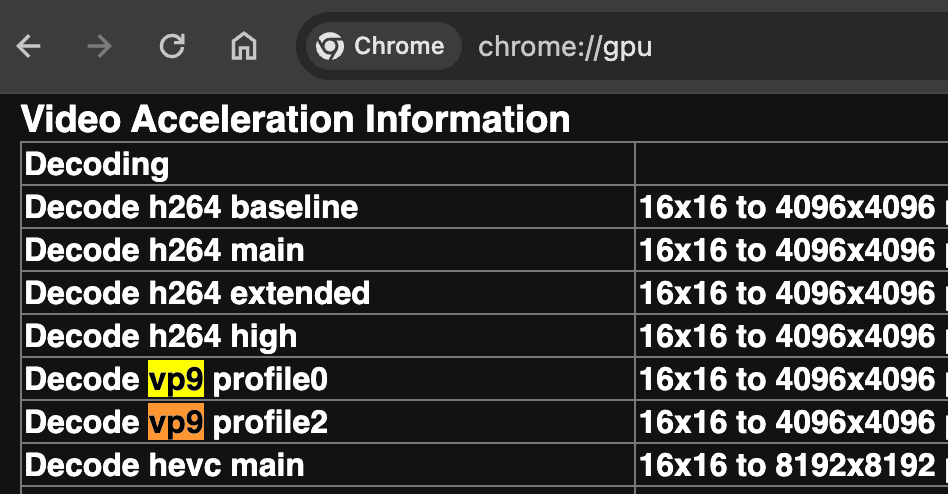
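
To pick the values for each Scale filter, it helps to compute the frame size of every rendition up front. The following sketch is plain arithmetic and does not describe how the Transcoder’s Scale filter works internally; the 1920x1080 source size is an assumption for illustration, and `scaled_size` is a hypothetical helper name.

```python
from typing import Tuple


def scaled_size(src_width: int, src_height: int, target_height: int) -> Tuple[int, int]:
    """Return (width, height) of a rendition that keeps the source aspect ratio.

    The width is rounded to the nearest even number, because encoders that
    use 4:2:0 chroma subsampling generally expect even frame dimensions.
    """
    if target_height > src_height:
        raise ValueError("target height exceeds the source height")
    exact_width = src_width * target_height / src_height
    even_width = 2 * round(exact_width / 2)
    return even_width, target_height


if __name__ == "__main__":
    # Assumed 1080p source scaled down to three common rendition heights.
    for height in (720, 480, 360):
        print(scaled_size(1920, 1080, height))
```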

Supported WebRTC video codecs are H264, VP8 and VP9, but the choice depends on the end device’s capabilities, platform and browser.

Let’s say you want to stream to macOS users who use either Safari or Chrome. VP9 still cannot be used in Safari (despite all the rumors), so H264 is your only choice there. Chrome for macOS, on the other hand, supports VP9 when the hardware provides acceleration for it, as recent MacBooks do. To make sure VP9 decoding is available, search for it on the ‘chrome://gpu/’ page.
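
Another way to see which codecs a particular client can accept is to look at the `a=rtpmap` lines of the SDP offer that its player sends, for instance as captured in the browser’s developer tools. Below is a minimal sketch of that inspection. The SDP fragment is hand-written and abbreviated for illustration only, and `offered_codecs` is a hypothetical helper name.

```python
import re

# Matches lines such as "a=rtpmap:96 VP8/90000" and captures the codec name.
RTPMAP = re.compile(r"^a=rtpmap:\d+ ([A-Za-z0-9\-]+)/\d+", re.MULTILINE)


def offered_codecs(sdp: str) -> set:
    """Return the upper-cased codec names found in the a=rtpmap lines."""
    return {name.upper() for name in RTPMAP.findall(sdp)}


# Abbreviated, hand-written SDP fragment (not captured from a real browser).
SAMPLE_SDP = "\r\n".join([
    "m=video 9 UDP/TLS/RTP/SAVPF 96 102",
    "a=rtpmap:96 VP8/90000",
    "a=rtpmap:102 H264/90000",
    "m=audio 9 UDP/TLS/RTP/SAVPF 111",
    "a=rtpmap:111 opus/48000/2",
])

if __name__ == "__main__":
    print(sorted(offered_codecs(SAMPLE_SDP)))
```

A real offer usually also lists helper payload types such as retransmission formats, so the resulting set may contain more names than the media codecs themselves.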

You may use multiple codecs in the same ABR WHEP stream, but you must create one full encoding ladder per codec for the streams that will be part of the ABR stream. For example, if you want to support H264 and VP8 with 360, 480 and 720 resolutions, you need to create those three output resolutions for each codec and build the ABR stream from 6 streams in total. See the Setting WHEP ABR output section below for setup details.
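
The size of such a ladder is simply the number of codecs multiplied by the number of resolutions. The sketch below enumerates the streams for the example above; the `bbb_<codec>_<height>` naming pattern is a hypothetical convention chosen for this illustration, and you may name the encoder outputs any way you like.

```python
from itertools import product


def abr_ladder(app: str, codecs: list, heights: list) -> list:
    """Return one 'application/stream' name per codec and resolution pair."""
    return [
        f"{app}/bbb_{codec}_{height}"  # hypothetical naming pattern
        for codec, height in product(codecs, heights)
    ]


if __name__ == "__main__":
    streams = abr_ladder("webrtc", ["h264", "vp8"], [360, 480, 720])
    print(len(streams))  # 2 codecs x 3 resolutions
    for name in streams:
        print(name)
```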

We recommend creating ABR streams with both H264 and VP8 for best compatibility with most platforms.

Please refer to the following documentation pages for details on the encoder’s options:

At this point we have several video stream outputs created from our single live source, as shown in the scenario screenshot above.

Transcode audio to Opus

The audio needs some attention as well.

First of all, only audio from the initial stream is required. One audio track in one stream provides audio to all other resolutions.

Here’s the audio pipeline in our scenario:

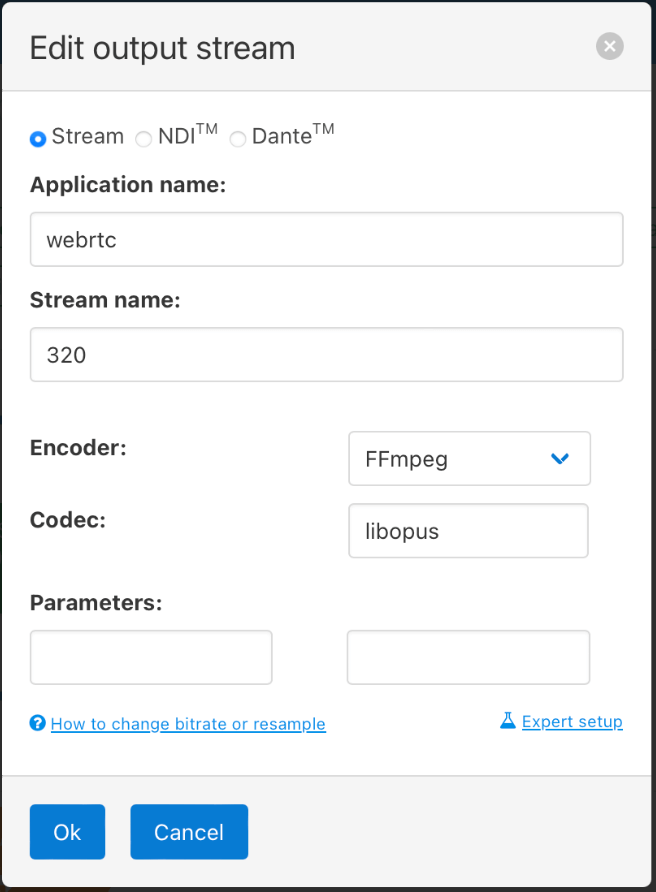

WebRTC mainly uses the Opus codec for audio. Choose FFmpeg as the encoder and select libopus.

This encoder is optimized for 48 kHz audio and does not support 44.1 kHz. The supported Opus sample rates are 8, 12, 16, 24, and 48 kHz.

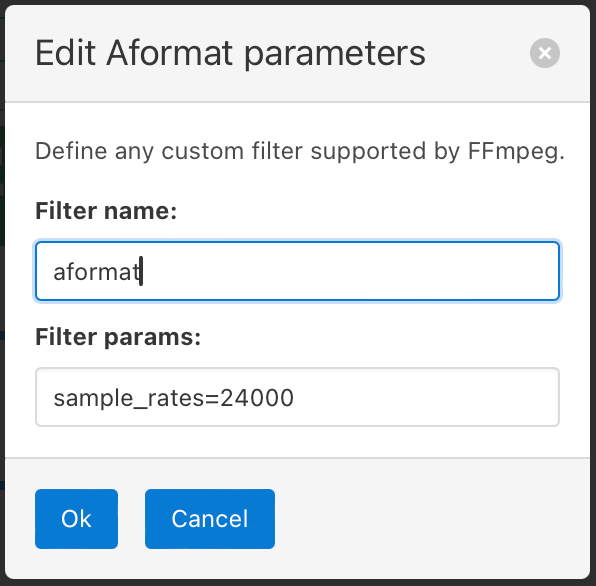

Thus, if your source has 44.1 kHz audio, the aformat filter is mandatory before the libopus encoder. Otherwise, Transcoder will fail to produce audio.

Also, please refer to the Re-sampling audio in Nimble Streamer article for details on aformat filter usage in Transcoder.
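
The sample rate rule above is easy to express as a check. This sketch only encodes the list of rates given in this section; falling back to 48 kHz when the source rate is unsupported is an assumption based on the note that the encoder is optimized for that rate, and both function names are hypothetical.

```python
# Opus sample rates listed in this section, in Hz.
OPUS_SAMPLE_RATES_HZ = (8000, 12000, 16000, 24000, 48000)


def needs_resampling(source_rate_hz: int) -> bool:
    """Return True when the source rate must be converted before libopus."""
    return source_rate_hz not in OPUS_SAMPLE_RATES_HZ


def opus_input_rate(source_rate_hz: int) -> int:
    """Return the source rate if Opus accepts it, otherwise 48000 (assumed default)."""
    return 48000 if needs_resampling(source_rate_hz) else source_rate_hz


if __name__ == "__main__":
    for rate in (44100, 48000, 16000):
        print(rate, needs_resampling(rate), opus_input_rate(rate))
```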

Setting WHEP ABR output

Now we’ll combine the streams produced by the Transcoder into a single WHEP ABR stream.

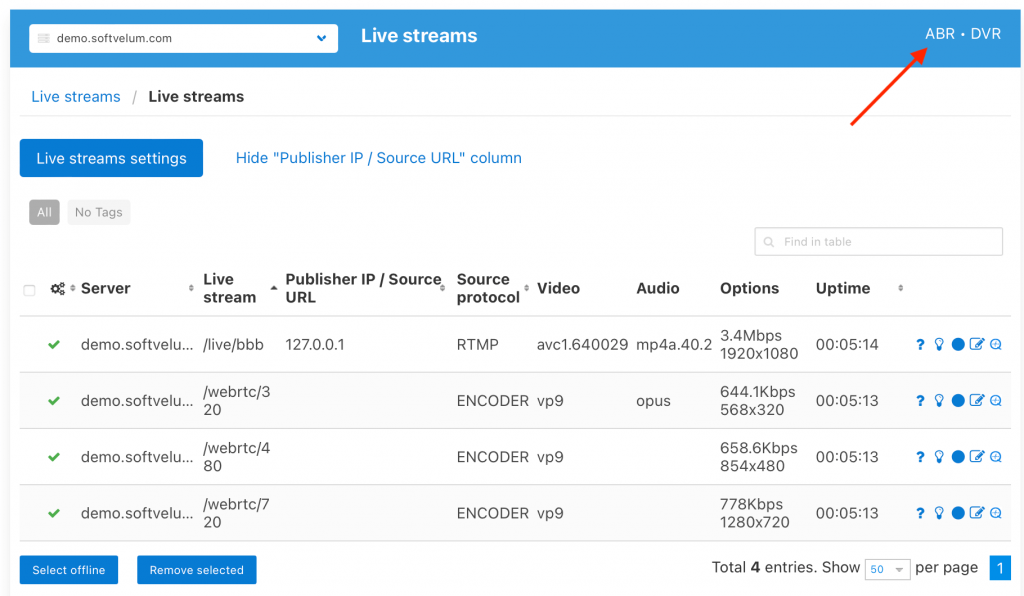

Click on the Nimble Streamer / Live Streams menu and find the ‘ABR’ link at the upper right corner of the ‘Live Streams’ dialog.

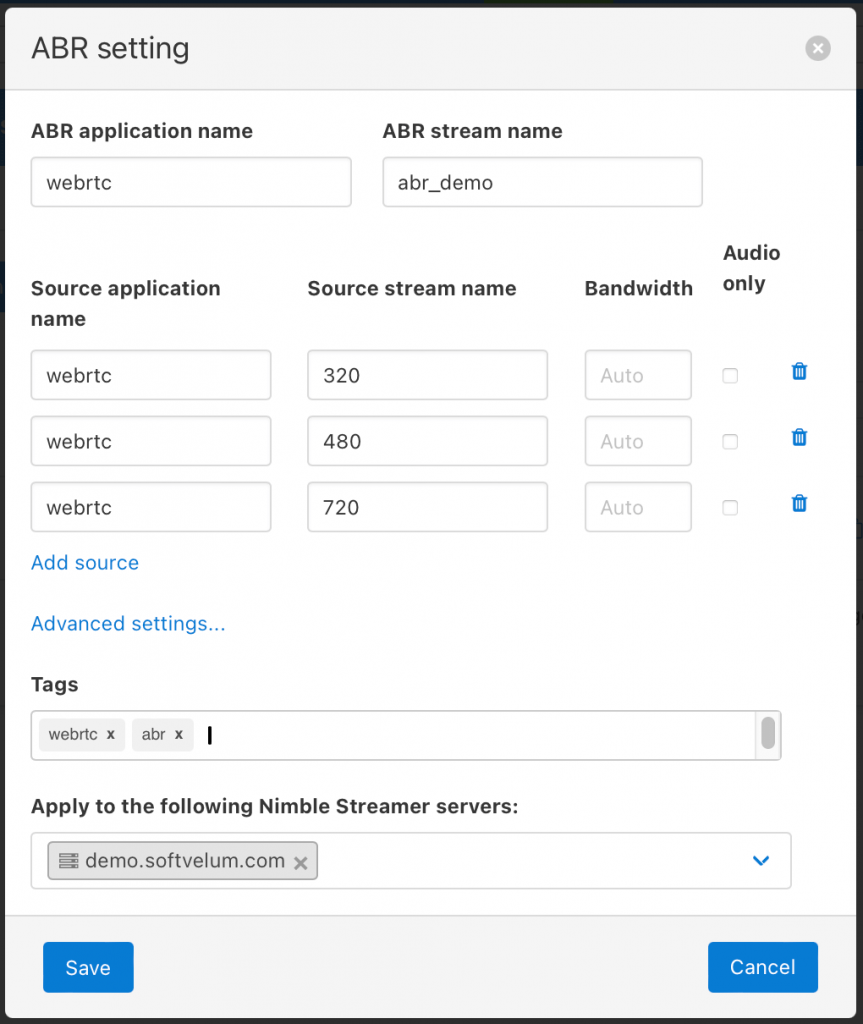

You will be redirected to the ABR streams page with the list of existing ABR settings. Click the Add ABR setting button, and the ABR settings dialog will appear.

In this dialog, you need to fill in the application and stream names which we prepared in the Transcoder. Do not use Bandwidth and Advanced settings, as they do not apply to the WebRTC stream.

The procedure is similar to the HLS ABR setup described in the ABR live streaming setup article.

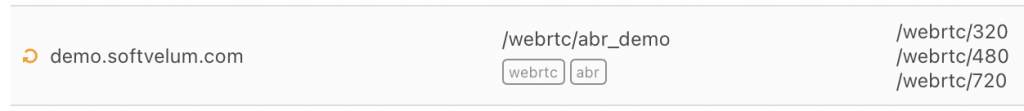

When you click Save, you’ll see a new record on the ABR Streams page for our WHEP ABR stream, displaying the streams that are used as different renditions.

If you click on a ‘?’ icon on this line, you will get the WHEP ABR URL, which will look like the following:

https://demo.softvelum.com/demo/abr_demo/whep.stream

From now on, WHEP ABR is available from the server, and we are ready to play the ABR stream.

Playing the stream

We provide pages for testing WebRTC WHEP streams:

Our WebRTC player is a fork of the Eyevinn player and it’s freely available in our GitHub repository.

However, you are free to try other WebRTC WHEP video players for ABR. The Eyevinn player itself, for example, works just fine.

Paste the WHEP ABR URL into the player and you’ll see the stream.
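
Behind the scenes, a WHEP player starts the session with a single HTTP request: it POSTs its SDP offer to the WHEP URL with the `application/sdp` content type, and the server is expected to reply with `201 Created`, an SDP answer in the body, and a `Location` header pointing at the session resource. The sketch below prepares such a request with the Python standard library. It does not create a real SDP offer, which must come from a WebRTC stack such as a browser, and the helper names are hypothetical.

```python
import urllib.request


def build_whep_request(whep_url: str, sdp_offer: str) -> urllib.request.Request:
    """Prepare the HTTP POST that carries an SDP offer to a WHEP endpoint."""
    if not whep_url.startswith("https://"):
        raise ValueError("the WHEP playback URL is expected to use HTTPS")
    return urllib.request.Request(
        whep_url,
        data=sdp_offer.encode("utf-8"),
        headers={"Content-Type": "application/sdp"},
        method="POST",
    )


def send_offer(request: urllib.request.Request):
    """Send the offer and return (session URL from Location, SDP answer)."""
    with urllib.request.urlopen(request) as response:  # 201 Created is expected
        return response.headers.get("Location"), response.read().decode("utf-8")


if __name__ == "__main__":
    # Placeholder offer: a real one is produced by the WebRTC stack.
    req = build_whep_request(
        "https://demo.softvelum.com/demo/abr_demo/whep.stream", "v=0\r\n"
    )
    print(req.get_method(), req.full_url)
```

Only `build_whep_request` runs in the example; `send_offer` needs a reachable server and a valid offer to return anything useful.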

Nimble Streamer server will check the client’s response time to calculate the bandwidth and will provide the lowest resolution to the video player first.

Nimble will track the client’s networking conditions, such as packet drops, to switch to the most suitable resolution of the stream. If no packet drops occur, the resolution (bitrate) will be increased. If too many packet drops are detected, Nimble will push a lower bitrate stream to the client.

Unlike with HLS, there’s no option to force a specific resolution from the video player (client) side for WHEP.
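
The switching behavior described above can be pictured with a toy model. To be clear, this is not Softvelum’s algorithm, which is covered in the separate article mentioned earlier; the thresholds, the one-step-at-a-time policy and the function name `next_rendition` are all assumptions made purely for illustration.

```python
def next_rendition(current: int, loss_ratio: float, count: int,
                   up_threshold: float = 0.0,
                   down_threshold: float = 0.05) -> int:
    """Return the index of the rendition to send next (0 is the lowest bitrate).

    Toy rule: step down when the packet loss ratio exceeds down_threshold,
    step up when it is at or below up_threshold, otherwise stay.
    """
    if loss_ratio > down_threshold:
        return max(current - 1, 0)
    if loss_ratio <= up_threshold:
        return min(current + 1, count - 1)
    return current


if __name__ == "__main__":
    index = 0  # playback starts from the lowest resolution
    for loss in (0.0, 0.0, 0.1, 0.01, 0.0):
        index = next_rendition(index, loss, count=3)
        print(f"loss={loss:.2f} -> rendition {index}")
```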

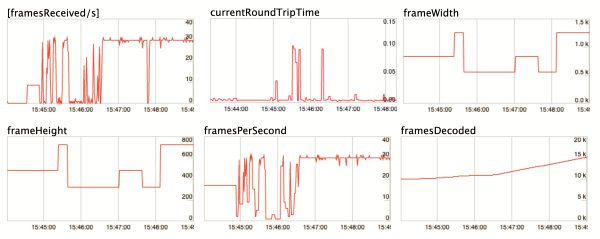

The Chrome browser allows monitoring client-side WebRTC stats on the chrome://webrtc-internals/ page, as shown here for our example:

For instance, in this screenshot you can see that an increasing RTT decreases the resolution of the stream and vice versa.

Playback doesn’t work?

Some things need to be considered if a stream has not started playing:

- The codecs supported by the hardware and browser. Ensure that your browser and hardware are compatible with the codec you’ve selected for the stream.

- WebRTC WHEP uses SSL. It means you must have a proper SSL setup and valid SSL certificates in use, otherwise the stream won’t start. So if the stream doesn’t work, please check your browser console for SSL errors first. If a self-signed certificate is used in a test environment, you need to accept it as trusted in your browser.

- Check that the single renditions are generated correctly, and that the /var/log/nimble/nimble.log file doesn’t contain errors in the [encoder] section regarding them.
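
The log check from the last item can be automated with a small filter. The sketch below assumes that the relevant lines contain both the `[encoder]` tag and the word "error"; the exact log line format is not described in this article, so treat the matching rule and the helper names as assumptions and adjust them to what you actually see in your log.

```python
from pathlib import Path


def encoder_error_lines(lines) -> list:
    """Return lines that mention both '[encoder]' and 'error' (any letter case)."""
    return [
        line.rstrip("\n")
        for line in lines
        if "[encoder]" in line and "error" in line.lower()
    ]


def scan_log(path: str = "/var/log/nimble/nimble.log") -> list:
    """Read the log file and return the matching lines."""
    with Path(path).open(encoding="utf-8", errors="replace") as log:
        return encoder_error_lines(log)


if __name__ == "__main__":
    log_path = Path("/var/log/nimble/nimble.log")
    if log_path.exists():
        for line in scan_log(str(log_path)):
            print(line)
```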

Video tutorial

Watch this video tutorial to build an SRT-to-WHEP streaming pipeline with Nimble Streamer.

Exclusive bonus track: WHEP Load Tester

Creating this kind of complex functionality requires proper testing. It’s especially important to test performance, as we had to make it as good as possible.

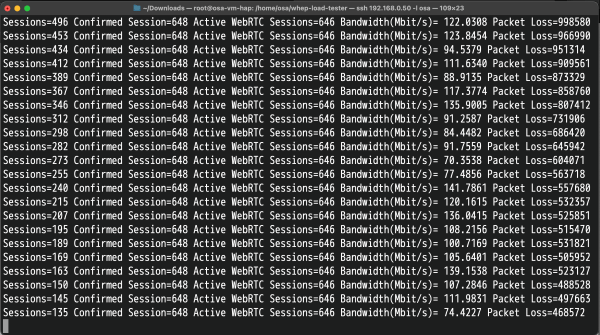

That is why we have developed the WHEP Load Tester, a tool specifically designed to evaluate the performance of WHEP WebRTC playback. This utility runs multiple simultaneous playback sessions for a given WHEP stream, so you can test the capacity and performance limits of your WHEP WebRTC solution. It has been instrumental in helping our team optimize the Nimble Streamer implementation of WHEP ABR to make sure it provides top-notch performance.

Get the tool at whep-load-tester repository with the git client:

git clone https://github.com/Softvelum/whep-load-tester

Next, go to the created folder and execute the build procedure:

cd whep-load-tester

go build

Note: If you face a malformed module path “crypto/ecdh” error while building whep_loader, please upgrade to the latest Go version from the official website.

Once the build is complete, you can run the utility by specifying the WHEP URL to test and the number of instances to run.

The basic syntax for running the WHEP load tester is as follows:

$ ./whep_loader -whep-addr <URL> -whep-sessions <number>

Parameters are:

-whep-addr <URL>: This parameter specifies the URL of the WHEP playback stream you want to test. It is a required parameter.

-whep-sessions <number>: This optional parameter defines the number of simultaneous sessions to run for the specified WHEP stream. If not provided, the default value is 1.

--help: Use this parameter to display the tool’s description and usage instructions.

To illustrate the use of the load tester, let’s consider a scenario where you want to test the load capacity of a WHEP stream located at https://demo.softvelum.com/demo/abr/whep.stream with 1000 simultaneous playback sessions. The command would look like this:

./whep_loader -whep-addr https://demo.softvelum.com/demo/abr/whep.stream -whep-sessions 1000

Here’s the output:

By simulating multiple concurrent sessions, you can optimize performance, check scalability and guarantee the reliability of your streaming service, whether you’re preparing for a major live event or simply making sure that your day-to-day streaming operations run smoothly.
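
If you want to run the tester from a script, for example to repeat the test with a growing number of sessions, the command line can be assembled programmatically. The sketch below uses only the two flags documented above; the wrapper function `whep_loader_command` and the default binary path are assumptions for illustration.

```python
import shlex


def whep_loader_command(url: str, sessions: int = 1,
                        binary: str = "./whep_loader") -> list:
    """Return the argument list for one whep_loader run."""
    if sessions < 1:
        raise ValueError("the number of sessions must be at least 1")
    return [binary, "-whep-addr", url, "-whep-sessions", str(sessions)]


if __name__ == "__main__":
    url = "https://demo.softvelum.com/demo/abr/whep.stream"
    for sessions in (100, 500, 1000):
        # Print the command; pass the list to subprocess.run() to execute it.
        print(shlex.join(whep_loader_command(url, sessions)))
```

Keeping the command as a list rather than a single string avoids shell quoting issues when the list is handed to `subprocess.run`.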

We’re looking forward to getting thoughts and feedback from the WebRTC community regarding our WebRTC feature set and WHEP playback in particular.