It was July 26th, 2024, and a group of 85 boats was cruising down the Seine river in Paris, carrying nearly seven thousand Olympians towards the opening of the biggest international sports event of the year. Millions of viewers from across the globe were watching athletes on each boat from close angles. However, no camera crews were seen on board. Instead, a few mobile phones could be noticed on the bows and sides of the vessels. Those were Samsung smartphones streaming directly to the live production facility.

Just four years earlier, this kind of mobile-only setup was used by the NFL in their first-ever virtual draft of 2020 during the pandemic. The production team used mobile kits with iPhones to stream directly from the homes of prospects, coaches, and managers. This mobile-centered remote production approach basically saved the day and made the ceremony unforgettable.

From early Periscope streaming on iPhones about a decade ago to modern setups with additional gear and private networks, mobile streaming has come a long way, and a simple smartphone is now an important part of a live production setup.

Mobile streaming has become a significant part of the Remote Integration Model (REMI), an approach that enables you to create collaborative live content regardless of your location.

A mobile device with the right software is now a tool that can replace some of the traditional live production equipment such as low- and mid-tier cameras and encoders. This reduces both upfront capital expenses, like equipment purchases, and operational costs for live content creators.

So how is that replacement possible?

First, let’s check the hardware.

Mobile Phone Hardware Capabilities

The current duopoly in the world of mobile devices has divided users. If you run live streaming production, your creators and contributors will likely belong to one of these two camps.

Apple dominates in many countries’ markets with its iPhone, positioning it as a symbol of design and innovation. Top-notch cameras, an amazing display, and the overall user experience make it a great choice for any creator. No wonder there are entire movies shot on iPhones.

The Google ecosystem, with the help of countless vendors, provides a huge number of phones running Android. Devices of any size, shape, capabilities, and cost are available to users everywhere. Within our team, we refer to this variety as a ‘zoo of devices’. Cost is the key here: you can get a device with a decent camera and a good display for a fraction of the cost of an iPhone. And most probably the level of capabilities and the output quality will be comparable with those from Apple.

From a live streaming creator’s perspective, the most important feature is the camera. All other components are either the same across all devices (like available network interfaces) or they are not used during live streaming (like still image capture features).

All devices provide the ability to stream Full HD 1080p video at 30 FPS, which is the format most commonly used in live events these days. They all support H.264/AVC and H.265/HEVC video, so a producer can choose the codec for incoming content to save bandwidth and keep transmission going even on a poor mobile connection. More advanced formats, such as 50 FPS, which is better suited for TV, or 4K resolution for high-end events, are also supported to provide the full range of capabilities.
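
To put rough numbers on the codec choice, here is a minimal Python sketch that estimates the uplink a single stream needs and how much data it uses per hour. The bitrates, the 30% headroom, and the function name `uplink_budget` are illustrative assumptions, not measurements or vendor recommendations; substitute values from your own tests.

```python
def uplink_budget(video_kbps: int, audio_kbps: int = 128, headroom: float = 0.3) -> dict:
    """Estimate required uplink and hourly data use for one stream.

    All inputs are assumptions supplied by the caller.
    """
    total_kbps = video_kbps + audio_kbps
    # Keep spare capacity so short network dips don't starve the stream.
    required_kbps = round(total_kbps * (1 + headroom))
    # kilobits/s * 3600 s / 8 bits per byte / 1,000,000 kB per GB
    gb_per_hour = round(total_kbps * 3600 / 8 / 1_000_000, 2)
    return {
        "total_kbps": total_kbps,
        "required_uplink_kbps": required_kbps,
        "gb_per_hour": gb_per_hour,
    }


# Hypothetical 1080p30 settings: H.264 at 6000 kbps vs. HEVC at 4000 kbps.
for codec, kbps in {"H.264": 6000, "HEVC": 4000}.items():
    print(codec, uplink_budget(kbps))
```

With these placeholder bitrates, the lower-bitrate stream needs proportionally less uplink and less mobile data per hour, which is the trade-off a producer weighs when picking a codec for a weak connection.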

Whatever device your operators have, or whatever you choose to buy as a producer, any device is capable of generating a high-quality picture using various lenses and on-board sensors.

Note that most Android vendors don’t give third parties access to certain camera features. From our experience, you won’t get 60 FPS from Samsung cameras when using any non-Samsung app. This limitation applies to the vast majority of vendors. It’s blocked at the API level, and we can’t change that in a stock app with a standard level of hardware access.

The one notable exception is the latest Google Pixel family of phones. Google always designs its flagship device to showcase the full capabilities of its hardware and operating system, so owning a Pixel phone allows you to access all available features in any third-party application.

As for iPhones, they grant third parties access to camera features, enabling you to stream Dolby Vision HDR at 60 FPS in 4K on the latest hardware without issues. Well, if you have the bandwidth to push this through, of course.

So the choice between iPhone and Android devices is yours and it depends on your current “hardware habits”. By default, any latest iPhone or top-tier Android phone will work great for any kind of live streaming scenario – both indoors and outdoors. Our team prefers iPhones just due to their stability during various streaming tests.

As for the price comparison, you can do the math yourself, as you know how much your cameras cost and how much it would cost to replace them. Our bet is that the phone in your pocket is much cheaper.

We also have to mention the obvious while we talk about the hardware. A mobile phone is much smaller than traditional cameras. If your contributors are out in the field, size and weight matter. When they take their phone out, put it on a small GoPro-like mount and push the button, the job is still done but without any extra elements involved. Even if they want to use a tripod or a gimbal, or add an external mic or light, it’s still basically a small-size setup.

Software to rule them all

When you use a traditional camera, it works out of the box: just plug it into the transmission hardware and press the button.

When you want to stream content from a mobile device, you need additional software. This may seem like a downside, but in fact, it brings more freedom of choice and flexibility when it comes to media transmission protocols.

With the right software, you can stream in any protocol used in production without buying new hardware. The de facto standard, RTMP, is straightforward and widely used across the industry. It’s available on any device and in practically every mobile streaming app.

SRT is also gaining traction in hardware, but it’s not available everywhere, particularly on legacy devices. Mobile streaming apps, however, have adopted SRT, making it increasingly available. SRT was released as an open-source library and open spec by Haivision in 2017 and was created for reliable delivery over unreliable networks (cellular, long-distance, etc.). In a world where cross-continent stream transmission is routine, this protocol helps ensure your producer receives the content at full quality, without interruptions or distortion.
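
SRT gets its reliability from a latency buffer that leaves time to retransmit lost packets, so the latency setting should reflect the round-trip time (RTT) between the phone and the receiver. The sketch below is an illustration under stated assumptions: the 4x RTT multiplier is a commonly cited rule of thumb rather than a fixed requirement, the host, port, and stream ID are hypothetical, and the query parameter names (`mode`, `latency` in milliseconds, `streamid`) follow the URI convention of libsrt’s sample tools. Your receiver may expect different names or units; FFmpeg, for example, takes latency in microseconds.

```python
from urllib.parse import urlencode


def srt_latency_ms(rtt_ms: float, multiplier: float = 4.0, floor_ms: int = 120) -> int:
    """Pick an SRT latency from a measured RTT (rule of thumb, not a spec).

    floor_ms matches SRT's default latency of 120 ms.
    """
    return max(floor_ms, round(rtt_ms * multiplier))


def srt_caller_url(host: str, port: int, rtt_ms: float, stream_id: str = "") -> str:
    """Build a caller-mode SRT URL for a hypothetical ingest endpoint."""
    params = {"mode": "caller", "latency": srt_latency_ms(rtt_ms)}
    if stream_id:
        params["streamid"] = stream_id
    return f"srt://{host}:{port}?{urlencode(params)}"


# Hypothetical cross-continent link with a 150 ms round trip.
print(srt_caller_url("ingest.example.com", 9000, rtt_ms=150, stream_id="boat-07"))
```

A higher latency value makes the stream more resilient on lossy cellular links at the cost of extra delay, so it is worth measuring the RTT from the actual location before going live.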

When discussing studio-specific protocols, the first that comes to mind is NDI. If you run a studio, chances are you use NDI cameras and NDI-capable software to get the content and to manipulate it. This new generation IP protocol gave a lot of flexibility and power to software and hardware manufacturers and it’s a new standard for such a setup. Of course, there is mobile software capable of NDI transmission from the devices. We’ll take a look at this case later in this article.

Some less obvious but interesting setups also use Zixi streaming, the RIST protocol, and even WebRTC to deliver signals. Few hardware cameras support these protocols, while mobile software that can deliver them is readily available.

As you may know, our team has been developing a mobile streaming application named Larix Broadcaster for many years. We have refined it to support all transmission protocols required by the production world and to leverage the hardware capabilities provided by device vendors. Of course, we are not alone. There are a number of apps out there that have some subset of the features needed for professional streaming. Some apps provide a lot of comfort for IRL streamers specifically.

So just like in the case of the hardware described above, the choice of software is yours.

With the hardware and a streaming application installed and configured, your operator can deliver production-grade content from any location with mobile internet or local Wi-Fi.

Real-life cases for replacing cameras with phones

Let’s see what this all means for real-life production teams. We’ll review typical scenarios where a mobile phone can be as powerful as a professional camera, or even better. Let’s dive in and take a closer look.

Use case: IRL one-man band

If you’re an avid Twitch user, you’re likely familiar with this: a creator streams live to an audience while walking outdoors or sitting somewhere, talking and responding to comments. That’s called IRL – in-real-life – streaming. This approach has become a genre of its own, and many creators do it on a regular basis. All you need is an account on a streaming platform and a mobile phone with some simple supporting gear (more on that later). Such use cases wouldn’t even make sense if the streamer were using a dedicated camera of any level: it would be just too much gear to carry around. So a phone is the only answer here.

Another niche in this case is live music, especially DJing. A lot of DJs stream their live sets online, using their phones as cameras. Take a look at The Ultimate Guide To DJ Livestreaming, which shows some of the gear and setup details.

Popular platforms for solo streamers include Twitch, YouTube Live, Kick, and Dacast, among many others. A typical streaming protocol for such cases is RTMP, but more and more services are embracing the reliable delivery of SRT as well.

Use case: remote contributors for remote production

A wide variety of production businesses use mobile phones as the main contribution tool for remote production scenarios. This is exactly the REMI production we mentioned above. The idea is to configure a device to stream into the production facility and send it out with an operator who can set it up on location, start the stream, and keep it running properly. This case is basically about running the stream “in the wild” in mostly uncontrollable environments.

A remote operator streaming to a studio is the most popular scenario for this use case. The operator is contributing to a live TV production on their own with no need to set up a camera and transmission equipment.

BTW, the boats on the Seine, which we mentioned at the beginning of this article, are a great example of such a use case as well. The engineers set up the phones on those boats, made them stream to a designated production facility and just let them flow down the river.

Speaking of mobile devices that move while streaming, we see streamers riding bikes, driving cars, and using other vehicles. A great example of that is the “Ride for Dad” charity event that takes place in Canada every year. It involves a huge number of bikers riding to raise money for this charity. As part of that event, a production team of enthusiasts mounted mobile phones on the bikes and built a remote production setup around them.

How about going above everyone? Grabyo made a remote production for Trail De Haute Provence in the Alps which involved mobile phones and drones for capturing real-time content of the runners and streaming the event live.

Use case: static setup for moving pictures

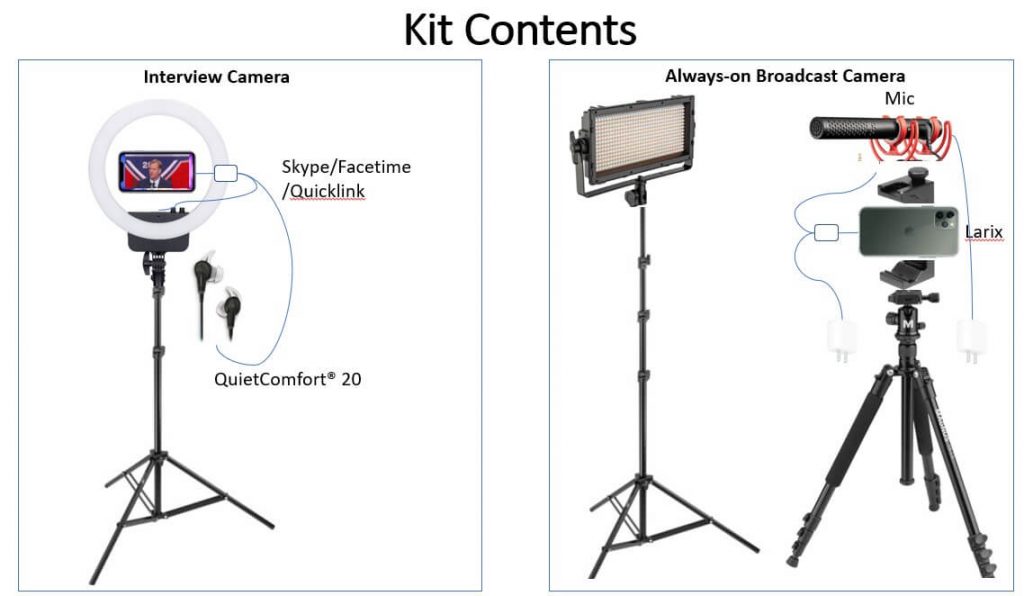

The 2020 NFL virtual draft mentioned at the beginning of this article was also a great example of a remote contribution setup where a simple piece of hardware with the right software made it possible to run a production-grade event smoothly. They used pre-installed Larix on iPhones with the SRT protocol to deliver the stream. The devices were using home Wi-Fi and the public internet for delivery, so the team chose this protocol to increase reliability. The production facility used Haivision hardware to receive the SRT streams.

Later that year, the same approach was used by the MLB in their own draft using mobile kits and RTMP for transmission.

By the way, you can take a look at Grabyo’s guide for producing a draft show for sports franchises to see examples of production setups for studio events as well. Different hardware but still, phones are used as sources of content.

Speaking of sports, our customers often use static mobile devices instead of regular cameras for streaming production of sports events. We hear from numerous users that they set up mobile phones on tripods or attach them on walls to capture basketball, volleyball, football, or other sports events from multiple angles. The resulting streams are then processed via live switching software to provide live output and deliver it to public streaming services or some designated web sites of respective sports leagues or colleges. This allows delivering the events to those who couldn’t attend in person. This is especially important for children’s sports where not all parents are able to be there, especially for events in different cities.

One important technical note on the sync-up in a multi-device setup.

When you’re streaming the same event from multiple angles and switching between cameras, it’s important that all sources of content are aligned in time. There may be a difference in frame time between devices due to protocol or encoding delays. This means that if you switch between sources showing the same motion, this motion will either repeat or jump forward in time, and the difference may be up to a couple of seconds.

To overcome this for RTMP and SRT inputs, we recommend using SEI NTP sync-up. With this technique, each sender embeds NTP-based timestamps into the SEI metadata of the video stream, which allows the receiving side to align all devices and process them on the same time scale. So if your source is a mobile app and your recipient hardware or software supports that, you can enhance your production significantly.
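
The alignment idea can be sketched in a few lines of Python. This is an illustration only, not how any particular receiver implements it: the function name, the source names, and the timestamps are hypothetical, and it assumes the receiver has already extracted each source’s capture timestamp on the shared NTP time scale. The source currently delivering the oldest frame has the longest delay, so every other source is held back by the amount it is ahead.

```python
def alignment_delays_ms(latest_capture_ms: dict[str, int]) -> dict[str, int]:
    """Return the extra buffering each source needs to line up with the slowest one.

    latest_capture_ms maps a source name to the capture timestamp (in ms, on the
    shared NTP time scale) of the frame that source is delivering right now.
    """
    if not latest_capture_ms:
        return {}
    # The source delivering the oldest frame has the longest end-to-end delay.
    slowest = min(latest_capture_ms.values())
    # Hold back every faster source by the amount it is ahead of the slowest one.
    return {name: ts - slowest for name, ts in latest_capture_ms.items()}


# Hypothetical snapshot taken at one moment on the receiver.
snapshot = {"cam_a": 1_000_000, "cam_b": 998_500, "cam_c": 999_900}
print(alignment_delays_ms(snapshot))
```

In this made-up snapshot, `cam_b` is 1.5 seconds behind `cam_a`, so `cam_a` would be buffered by 1500 ms and `cam_c` by 1400 ms before switching between them looks seamless.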

Obviously, mobile devices can be used in more traditional circumstances than riding bikes or climbing mountains.

A local studio with guests talking to each other is a very common live production case. Basically, it’s a good old local TV studio setup, but with mobile devices instead of traditional hardware. In this case, you can use studio-oriented protocols, such as NDI. This protocol is designed specifically for production environments within the same local network, so all content sources and production software stay aligned with each other with real-time latency and accuracy. So if your mobile app supports NDI output, you can definitely make your mobile phones part of this setup.

Use case: a reliable backup and addition

As we mentioned at the beginning of this article, phones with proper software can replace low- and mid-tier cameras. But of course, they are not all-powerful, and professional gear is still on top when it comes to professional production.

However, even in this case, phones can be a great backup tool.

First of all, hardware can fail unexpectedly, and if any of your cameras go out on set, your staff’s mobile devices can be quickly set up for streaming. It’s always good to have something instead of nothing, right?

Also, these days there’s no such thing as “too much content.” Why not set some mobile devices as additional sources of content besides traditional cameras and transmitters? Take a look at how a professional production crew used phones and tablets as streaming sources within a huge wireless setup at an American Rally Association competition on the Upper Peninsula of Michigan in the wilderness.

Some tips

With our mobile software being used in so many different scenarios, we’ve collected some tips and tricks on how to make streaming from mobile devices easier and better.

Using a phone on its own is fun, but it works even better with some additional gear

Hold it: tripods, gimbals, and more. Holding the phone in your hand might not be convenient, so a number of holding devices can be used, starting with a simple selfie stick, some GoPro mount or a tripod, up to using an advanced gimbal to film on the go with good stabilization.

Power management. If you stream for more than an hour or so, the on-board battery alone is unlikely to last. That’s why you need to plug the phone into either a power bank or a stationary power supply, such as a wall socket or a generator. Some streaming options require more power than others, such as using the HEVC codec or several overlays in your stream; they all consume resources, so you need to take that into account. A screen that stays on also adds to the consumption. Some streaming apps can run in the background, so you can turn off the screen to reduce power consumption.
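
For planning purposes, the power budget can be estimated with simple arithmetic. The Python sketch below is a rough illustration: the battery capacity, the average current draw, and the 70% power bank efficiency are placeholder assumptions, and it treats the mAh ratings of the phone and the power bank as comparable, which is a simplification. Measure the real draw of your own device, codec, and screen settings during a test stream.

```python
import math


def runtime_hours(battery_mah: float, draw_ma: float) -> float:
    """Hours of streaming the phone's own battery can sustain."""
    return battery_mah / draw_ma


def power_bank_mah(stream_hours: float, battery_mah: float, draw_ma: float,
                   efficiency: float = 0.7) -> int:
    """Extra capacity needed from a power bank for the planned stream.

    efficiency is an assumed share of the bank's rated capacity that
    actually reaches the phone after conversion losses.
    """
    deficit_mah = max(0.0, stream_hours * draw_ma - battery_mah)
    return math.ceil(deficit_mah / efficiency)


# Placeholder numbers: 4000 mAh battery, 2000 mA average draw, 6-hour event.
print(runtime_hours(4000, 2000))      # hours on the internal battery alone
print(power_bank_mah(6, 4000, 2000))  # rated power bank capacity to bring
```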

Temperature control. When you’re streaming on the street in hot weather or in direct sunlight, your phone might get hot. And if you don’t handle the cooling, it will just shut down. So try to avoid direct sunlight at least. Another option to cool down the device would be to use… well, a cooler. There are some coolers out there that can do the job pretty well.

Network management. If you’re streaming via the cellular network, you may face some congestion issues, especially if you’re streaming from a crowded area. In order to address that, you may consider using a portable router, preferably with some bonding solution on board. Bonding allows streamers to be less dependent on a single cellular provider and get a more reliable uplink.

Test and then test again. If you’re new to mobile streaming, of course you need to test this approach first before using it in production. Try different phones to see which picture is better. Try different software to see which has all the features you need. Run long tests like 6-10 hours straight to see how your whole setup behaves under stress. The goal is to push your bundle to the real limit, so you don’t bump into it later in the middle of your streaming event.

Try it now

Now it’s up to you to decide if some of the cases above are worth using mobile devices in place of traditional cameras. Pick up any devices that you have at your disposal and test them as described above. Run some simple event, make adjustments to the setup, and just start using it full scale. This will save capital expenses for you, as well as provide a good return for your efforts in a very short time.

As we mentioned, our team creates and maintains the Larix Broadcaster mobile application, which brings any mobile phone or tablet up to the standard of a professional camera. You can download it for both Android and iOS. The app supports the hardware features that device vendors make available and allows streaming content via RTMP, SRT, NDI, Zixi, WebRTC, RIST, and RTSP. So whatever you want to stream, Larix will process and deliver it. You definitely need to try it as part of your mobile streaming setup.

In addition to Larix Broadcaster, we created Larix Tuner – a web service for remote control and management of devices running the Broadcaster app. It’s very useful when you have a fleet of devices and you want to manage them all from a single web service.

You can contact our team if you have any questions about the features mentioned in this article or any other inquiries.