Softvelum continues to enhance accessibility of content delivery with the latest update to Nimble Streamer voice recognition: integration with the Speechmatics speech recognition service. This powerful addition expands the existing feature set that enables real-time transcription and translation of live streams into subtitles.

Previously, Nimble Streamer introduced native AI-based speech recognition with the Whisper.cpp engine. Now, users can also choose Speechmatics, a robust cloud-based service known for its accuracy, multilingual support, and adaptability to various accents and dialects. This provides more flexibility for streamers, broadcasters, and service providers who require highly accurate, scalable transcription and translation solutions.

Key benefits of Speechmatics integration

- High accuracy: Speechmatics has one of the industry’s leading engines for real-time STT (speech-to-text) transcription and translation.

- Scalable architecture: offload the recognition process to Speechmatics’ infrastructure to reduce the load on your own servers.

This new option is ideal for live broadcasters looking for reliable, high-performance captioning with minimal setup.

Note that Speechmatics service pricing applies to the transcription process; refer to Speechmatics for an exact quote.

Softvelum is not affiliated with Speechmatics.

Now, let’s see how to enable Speechmatics in Nimble Streamer.

Prerequisites

In order to enable and set up Speechmatics transcribing in Nimble Streamer, you need to have the following.

- A WMSPanel account with an active subscription.

- Nimble Streamer installed on Ubuntu 24.04 and registered in WMSPanel. Other OSes and versions will be supported later.

- Live Transcoder installed, with its license activated and registered on your Nimble Streamer instance. You can do this easily via the panel UI.

- An Addenda license activated and registered on your Nimble Streamer instance.

To add the Nimble Transcriber transcription engine, run the following command and then restart the Nimble instance as shown.

sudo apt install nimble-transcriber

sudo service nimble restart

That’s it, you may proceed to the setup.

Connect Speechmatics with Nimble

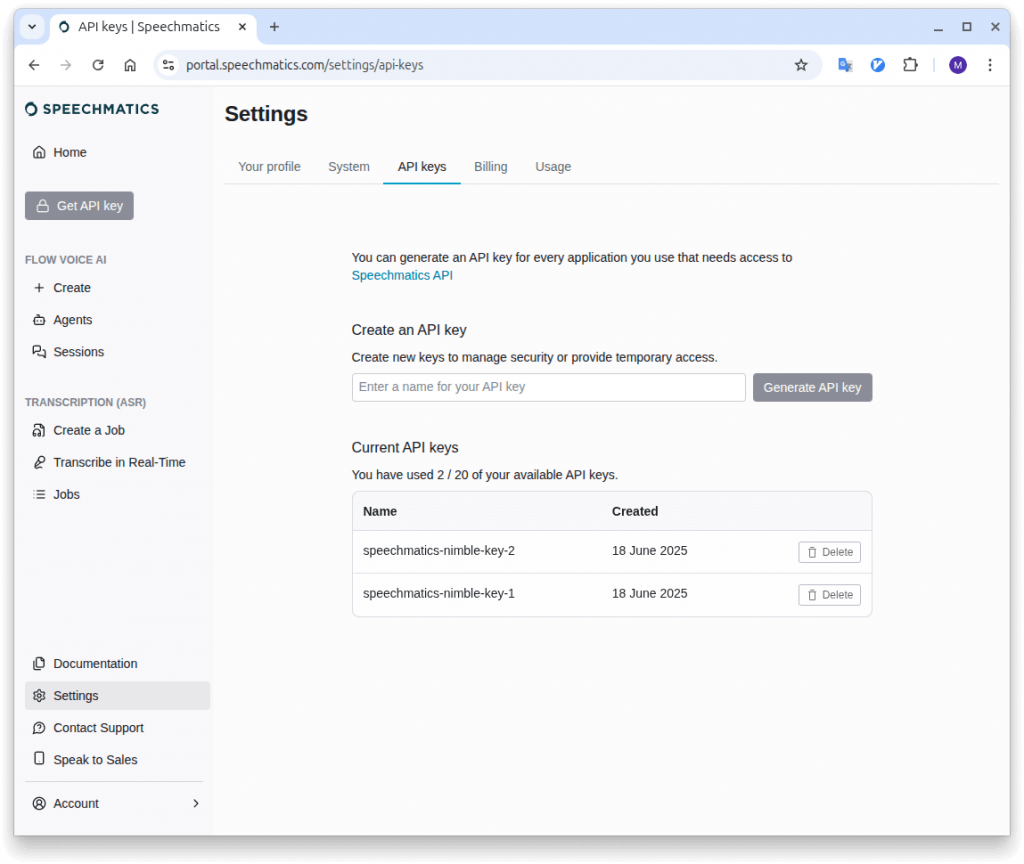

We assume you have an active Speechmatics account. Sign in and go to the Settings -> API Keys page.

Generate an API key and copy its value for later use.

Also, get the API endpoint URL from the docs page.

There are two ways to enable Speechmatics for a Nimble instance: a server-wide setting and a per-stream setting.

Server-wide setting for Nimble

Add the following parameters to the nimble.conf file:

transcriber_type = speechmatics

speechmatics_api_url = wss://eu2.rt.speechmatics.com/v2

speechmatics_api_key = <copied-key-value>

speechmatics_language = <lang_code>

The speechmatics_language parameter is optional; it defines the language that will be recognized in all incoming streams on the server. By default, it’s “en”, i.e. English. If a stream is in a language other than the one defined in this parameter, it will not be recognized.
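Before restarting, it can help to confirm that the parameters are actually present in the config. The following is a minimal sketch, not a Softvelum tool: it assumes the config consists of simple "key = value" lines as shown above and that lines starting with "#" are comments (an assumption). The helper names `parse_conf` and `check_speechmatics` are hypothetical, and the sample text stands in for the real file contents.

```python
# Parameters the article lists as needed for the server-wide setup.
REQUIRED = ("transcriber_type", "speechmatics_api_url", "speechmatics_api_key")


def parse_conf(text):
    """Parse 'key = value' lines; blank lines and '#' comments are skipped (assumed syntax)."""
    params = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        params[key.strip()] = value.strip()
    return params


def check_speechmatics(params):
    """Return a list of problems; an empty list means the basic parameters are present."""
    problems = [f"missing parameter: {name}" for name in REQUIRED if not params.get(name)]
    if params.get("transcriber_type") not in (None, "speechmatics"):
        problems.append("transcriber_type is not 'speechmatics'")
    url = params.get("speechmatics_api_url", "")
    # The article's example endpoint uses wss://; flagging anything else is an assumption.
    if url and not url.startswith("wss://"):
        problems.append("speechmatics_api_url does not start with wss://")
    return problems


# Sample text standing in for the real config file; the key value is a placeholder.
sample = """
transcriber_type = speechmatics
speechmatics_api_url = wss://eu2.rt.speechmatics.com/v2
speechmatics_api_key = EXAMPLE_KEY
"""

params = parse_conf(sample)
print(check_speechmatics(params))                 # list of problems, empty if none
print(params.get("speechmatics_language", "en"))  # falls back to the documented default
```

To run it against a real server, read the contents of your nimble.conf into a string and pass it to `parse_conf` instead of the sample.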

Restart the Nimble instance to make the parameter work:

sudo service nimble restart

Per-stream setting for Nimble

If you want to define the language per application or stream, please refer to the section “Specifying per-stream languages for transcription and translation” below.

Enable recognition for live streams in Nimble

Once your Nimble instance has the speech recognition package, and Speechmatics parameters are set, you may enable transcription for that server in general as well as for any particular live stream.

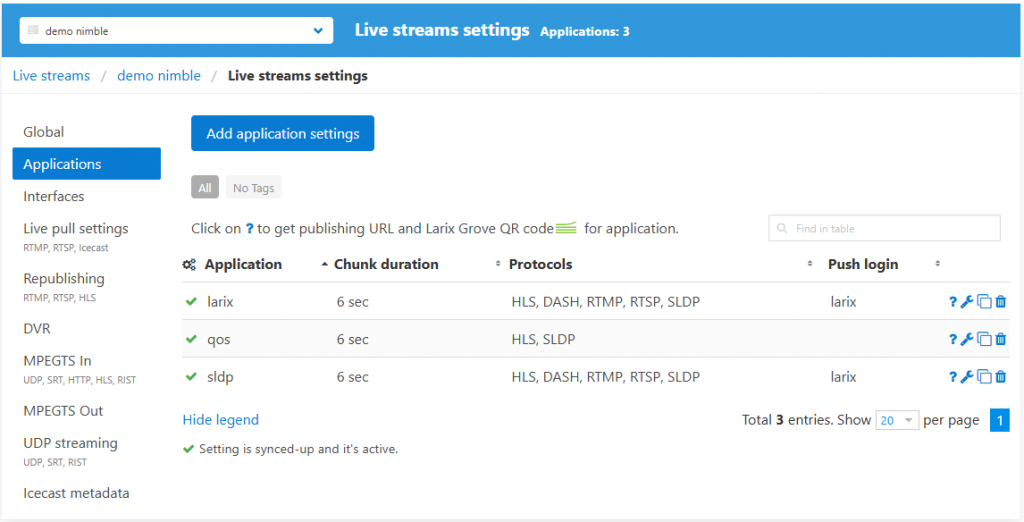

To enable transcription for a particular set of streams, go to Nimble Streamer top menu, click on Live Streams Settings and select the server where you want to enable transcription.

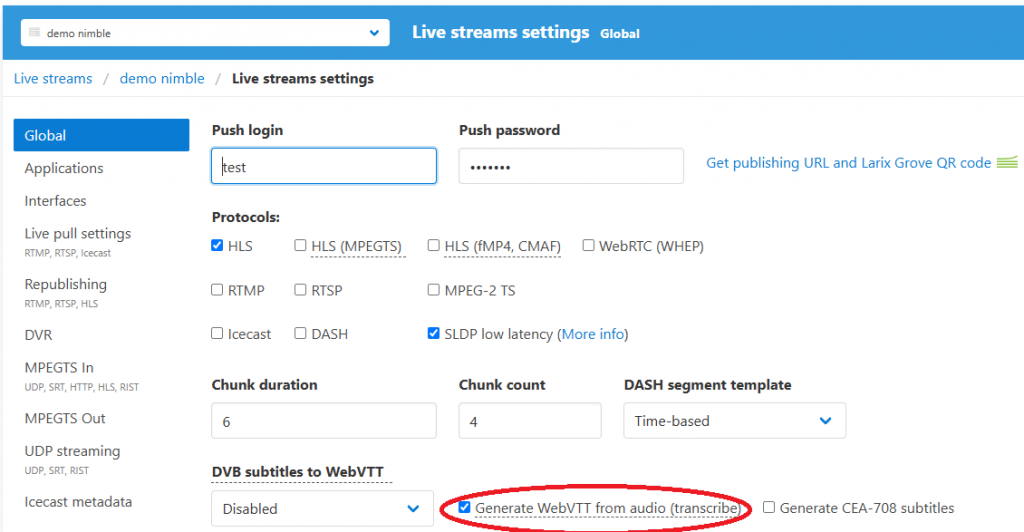

If you’d like to enable transcription on the server level, open the Global tab, check the Generate WebVTT for audio checkbox, and save the settings.

You may also select a particular output application where you’d like to enable transcription, or create a new app setting. The same Generate WebVTT for audio setting is checked to enable the feature.

You may also enable the generation of CEA-708 subtitles; please read this article for more details.

After you apply all settings, you need to restart the input stream. Once the restarted input is picked up by the Nimble instance, the output HLS stream will have WebVTT subtitles carrying the transcribed and translated closed captions.
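One way to confirm that subtitles made it into the output is to look at the HLS master playlist. The sketch below assumes the subtitles are declared as standard EXT-X-MEDIA renditions with TYPE=SUBTITLES, as the HLS specification describes. The sample playlist is illustrative only and is not actual Nimble output, and `subtitle_renditions` is a hypothetical helper name.

```python
import re

# Matches ATTRIBUTE=value pairs where the value is either quoted or runs up to the next comma.
ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def subtitle_renditions(master_playlist):
    """Return attribute dicts for every EXT-X-MEDIA tag with TYPE=SUBTITLES."""
    found = []
    for line in master_playlist.splitlines():
        line = line.strip()
        if not line.startswith("#EXT-X-MEDIA:"):
            continue
        attr_text = line.split(":", 1)[1]
        attrs = {key: value.strip('"') for key, value in ATTR_RE.findall(attr_text)}
        if attrs.get("TYPE") == "SUBTITLES":
            found.append(attrs)
    return found


# Illustrative playlist text; real playlists will differ in names and URIs.
SAMPLE_PLAYLIST = """#EXTM3U
#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="subs",NAME="English",LANGUAGE="en",URI="subs_en.m3u8"
#EXT-X-STREAM-INF:BANDWIDTH=2000000,SUBTITLES="subs"
chunks.m3u8
"""

for rendition in subtitle_renditions(SAMPLE_PLAYLIST):
    print(rendition.get("LANGUAGE"), rendition.get("URI"))
```

In practice you would download the playlist text from your stream's playback URL and pass it to the function; an empty result suggests the input has not been restarted yet or transcription is not enabled for that application.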

Specifying per-stream languages for transcription and translation

As mentioned for the speechmatics_language parameter, by default the language is defined on the server level. If you’d like to apply languages other than the default one, you can specify the full list of apps and streams with their respective languages in a separate config file. Use this parameter to define its location:

transcriber_config_path = /etc/nimble/transcriber-config.json

The content would be as follows.

{

"speechmatics_params" : [

{

"api_url": "wss://eu2.rt.speechmatics.com/v2",

"api_key": "<your_api_key>"

},

{"app":"tv_source_1", "stream":"stream_1", "lang":"en"},

{"app":"tv_source_2", "lang":"es"},

{"app":"tv_source_3", "stream":"inter_stream", "lang":"en", "target_langs": "es,fr"},

{"app":"live", "stream":"med-conference", "lang":"en", "target_langs": "en", "transcription_config": { "operating_point": "enhanced", "domain": "medical"} }

]

}

The following parameters can be used:

- “app” defines the Nimble Streamer application.

- “stream” defines the respective stream. You may skip it; in this case the language settings will be applied to all streams within the specified app.

- “lang” is the two-letter language code.

- “target_langs” is a comma-separated list of language codes for automatic translation. If it’s not specified, no translation will be added.

- “transcription_config” is a set of parameters; their description can be found in the Speechmatics docs.

Changes to this file are applied without restarting Nimble. However, you need to restart the input stream in order to transcribe it with the new language.
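Since this file is edited by hand, a quick check that it is valid JSON and that each stream resolves to the language you expect can save a restart cycle. The sketch below is only an illustration under stated assumptions: the function names are hypothetical, and the lookup order (an exact app and stream match first, then an app-wide entry) is assumed from the description of the “stream” parameter, not taken from Nimble's implementation.

```python
import json


def load_entries(config_text):
    """Split speechmatics_params into connection settings and per-app/stream entries."""
    params = json.loads(config_text)["speechmatics_params"]  # raises on malformed JSON
    connection = next((p for p in params if "api_url" in p), {})
    entries = [p for p in params if "app" in p]
    return connection, entries


def find_entry(entries, app, stream):
    """Prefer an exact app+stream match, then an app-wide entry (assumed precedence)."""
    app_wide = None
    for entry in entries:
        if entry["app"] != app:
            continue
        if entry.get("stream") == stream:
            return entry
        if "stream" not in entry and app_wide is None:
            app_wide = entry
    return app_wide


def target_languages(entry):
    """Split the comma-separated target_langs string; an empty list means no translation."""
    return [code.strip() for code in entry.get("target_langs", "").split(",") if code.strip()]


# Shortened version of the example config above, with a placeholder key.
SAMPLE_CONFIG = """
{
  "speechmatics_params": [
    {"api_url": "wss://eu2.rt.speechmatics.com/v2", "api_key": "EXAMPLE_KEY"},
    {"app": "tv_source_1", "stream": "stream_1", "lang": "en"},
    {"app": "tv_source_2", "lang": "es"},
    {"app": "tv_source_3", "stream": "inter_stream", "lang": "en", "target_langs": "es,fr"}
  ]
}
"""

connection, entries = load_entries(SAMPLE_CONFIG)
entry = find_entry(entries, "tv_source_3", "inter_stream")
print(entry["lang"], target_languages(entry))
```

To check a real setup, read the file referenced by transcriber_config_path into a string and pass it to `load_entries`; a `None` result from `find_entry` means the stream has no entry in this file.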

Using multiple voice recognition engines

You may combine multiple ASR engines on the same Nimble instance if you need some streams to be processed by some other AI model or service.

Please let us know if you have any questions, issues, or suggestions for our voice recognition feature set.